Edge in the News: 2008

RESERVE ANOTHER LAUREL for Edward O. Wilson, the Pellegrino University Professor emeritus at Harvard, serial Pulitzer winner, and prominent intellectual: online celebrity.

Forget Charlie Rose; Wilson has Google for a soapbox. Amid the amateur-hour piffle of YouTube "talent" and skateboarding dogs, the famed biologist stands in bold relief, with more than 500 Google video search results to his credit: interviews ranging well beyond TV shows to a spate of appearances on several Web-only video platforms such as Meaningoflife.tv, Bigthink.com, Fora.tv, and the online home of the Technology Entertainment Design (TED) conference.

It was through a TED presentation that Wilson chose to unveil his proposal for the Encyclopedia of Life, a Wikipedia of biodiversity, and a few short months later he secured the funding necessary to launch it. Hitting the talk show circuit never looked so passé.

The rise this year of a host of new Web video sites targeting high-minded, edifying content suggests that today's marketplace of ideas is rapidly moving online. "The Last Lecture," a 76-minute video by the late computer science professor Randy Pausch recorded late last year, became a crossover phenomenon, viewed by at least 7 million people, and easily one of the most widely watched academic events in history. The buzzy presentation shorts of TED surged past 50 million views by only their second birthday.

Newly minted video start-ups Fora.tv and Bigthink.com, boasting auspicious programming starring top-shelf public intellectuals, each pledged this year to become a thinking person's YouTube. With combined inventories in the tens of thousands of clips and numerous partnerships with major media properties, their viewership is only expanding. And iTunes U, a multimedia channel of free higher-education content in the iTunes Store, continued to amass its increasingly Amazonian stockpile of labs and lectures from schools around the world.

From interviews with obscure geniuses to splashy marquee names, and from hoary conference proceedings and drowsy first-year survey classes to passionate debates at exclusive, invite-only affairs like the Aspen Institute, an entire back catalog of cerebral Web video is steadily accumulating online. How do the various offerings rate?

The Oprah: TED

The TED Talks program single-handedly popularized the phenomenon of brainy programming. It's an online repository of zippy, often provocative presentations delivered by speakers at the eponymous conference. Topics range widely across the arts and sciences: inventors in today's Africa, the nature of happiness, and an evolutionary theory of technology.

TED has become, in no small part due to its videos' viral popularity, a high rooftop in academia for world authorities to report on the latest thinking in their fields, an Oprah of the intelligentsia. Like Oprah, it wields considerable kingmaking power through its presentation schedule, whose speakers graduate to greater buzz, and sometimes lasting celebrity, in the wider media.

Why do TED videos work? Their winning production values and packaging translate well online. Thanks to the meticulously observed conventions of a TED presentation (brisk pacing, humor, strong visuals), even the stuffiest subject proves a reliably entertaining break from the tedium and rigors of high-church academic discourse. A case in point is Swedish global health professor Hans Rosling talking about how to visualize statistics. TED is not a venue for speakers to go deep on a subject; instead, it's one for teasing out the more bravura elements of their work.

The Public Broadcaster: Fora.tv

Amassing video from public events and colloquia around the world, the online video storehouse Fora.tv is the wide-angle lens to TED's close-up. Trading a TED-like uniform polish for the capaciousness of its collection, Fora.tv is quickly expanding its inventory through a deep roster of partners: a number of schools, the New Republic, the Brookings Institution, the Oxonian Society, the Long Now Foundation, the Hoover Institution, and the Aspen Institute.

The speakers themselves include a wide range of Nobel laureates, cultural notables, politicians, and policy wonks, but there are also a good many surprises. Settle in for a lively food fight between Christopher Hitchens and the Rev. Al Sharpton, tussling over religion at the New York Public Library, and then rove over to see Christian Lander, author of the blog Stuff White People Like, gawking meekly and stopping to photograph the large crowd attending his Washington, D.C., reading.

Fora.tv's features are more specialized than those of the other sites. Users can compile their own video libraries and can also upload and integrate their own contributions, public-access-cable style. Its production quality hardly warrants demerits in light of the catalog collected here; one could sink into this C-SPAN of the public sphere for days of unexpected viewing.

The Talk Show: Bigthink.com

For those who think small is better, the video clip library of Bigthink.com delivers. Its shorts are studio-shot, first-person interviews. Each clip features the interviewee answering a single question or waxing on a single topic: for example, UCLA law professor Kal Raustiala explaining his "piracy paradox," the puzzle that intellectual property protection may be inhibiting creative progress in culture and industry.

Like TED Talks, Bigthink.com videos can be searched by theme and idea as well as by speaker. The site also provides text transcripts, which are easy to scan and search for those doing research.

Its edge lies in the prominence of its interviewees (world leaders, presidential candidates, and prestigious thinkers), combined with its prolific production schedule. It keeps up the clip of new content one would expect of a daily television show, not an Internet video site. Still, for longer sittings, its quick-take editing style can feel thin.

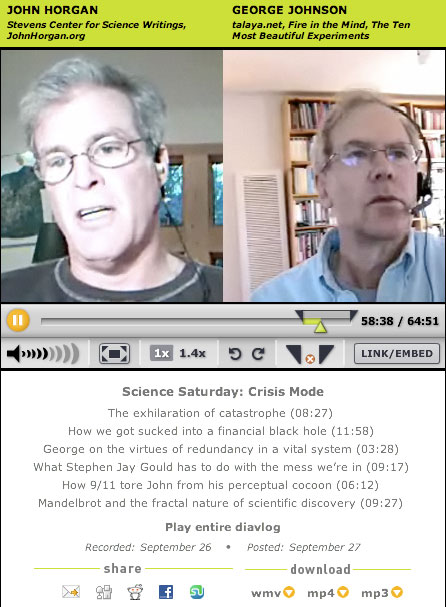

The Op/Ed: Bloggingheads.tv

Building on an earlier video series concept, Meaningoflife.tv, the cultural critic Robert Wright created, with the prominent blogger Mickey Kaus, a head-to-head video debate series called Bloggingheads.tv. Advertisements tout it as being like "Lincoln-Douglas . . . with lower production values."

Nearly every day, it publishes a new "diavlog," or two-way video debate, and chapter markers let users jump quickly between conversation points. The diavlog is a millennial take on another press mainstay, the opinion editorial. (Disclosure: Bloggingheads.tv has a partnership with the New York Times Co. through the Times' opinion pages online. The Globe is owned by the New York Times Co.)

The roster of speakers is heavy on journalists and public-policy types, and the daily subject matter skews, somewhat wearingly for some of us, toward headline political news and opinion. While Wright and Kaus are the mainstays, the stable of commentators is large enough to keep Bloggingheads.tv free of the whiff of celebrity: the topic is always the star.

Graduate Studies: Edge.org

For those seeking substance over sheen, the occasional videos released at Edge.org hit the mark. The Edge Foundation community is a circle of mainly scientists, but also other academics, entrepreneurs, and cultural figures, brought together by the literary agent John Brockman.

Edge's long-form interview videos are a deep dive into the daily lives and passions of their subjects, presented without primers or apologies. The site is currently streaming excerpts from a private lecture by Nobel laureate Daniel Kahneman to Edge colleagues on the importance of behavioral economics, including a thoughtful question-and-answer session.

It won't suit everyone's tastes. Unvarnished speakers such as Sendhil Mullainathan, a MacArthur fellow with intriguing insights on poverty, are filmed in casual lecture; his thoughts unspool in the manner of someone not preoccupied with clarity or economy of expression. The text transcripts help in this context.

Regardless, the decidedly noncommercial nature of Edge's offerings, and the egghead imprimatur of the Edge community, lend its videos a refreshing air, making one wonder if broadcast television will ever offer half the off-kilter sparkle of their salon chatter.

And as for Charlie Rose? Perhaps he's voted with his feet. Excerpts of his PBS show are now streaming daily at the online magazine Slate.

Jeffrey MacIntyre (jeffmacintyre.com), who writes on culture, science and technology, is also a consultant to digital publishers (predicate-llc.com). He lives in New York.

Correction: An article about video lectures ("U Tube") in the Ideas section of Sunday, Nov. 2, misidentified Edward O. Wilson of Harvard University. He is a biologist.

I was watching a PBS production the other day entitled Dogs That Changed the World, and wondered about our contemporary fascination with things "That Changed the World."

The Machine That Changed the World (a 1991 book about automotive mass production). Cod: A Biography of The Fish That Changed the World (a 1998 book about, well, cod). The Map That Changed The World (2002 book about geologist William Smith). 100 Photographs That Changed the World (Life, 2003). Bridges That Changed the World (book, 2005). The Harlem Globetrotters: The Team That Changed the World (book, 2005). How William Shatner Changed the World (documentary, 2006). Genius Genes: How Asperger Talents Changed the World (book on brilliant people with autism, 2007). The Book That Changed the World (2008 article in the Guardian, about The Origin of Species).

This "Changed the World" stuff is getting to be a bit tedious, isn't it? Now that we have Dogs That Changed the World, can Cats That Changed the World be far behind? ...

...Bill Bean notes that there is already a place to read about People Who Changed the World and Then Changed Their Minds. Every year, the people at the Edge Foundation ask writers, thinkers, psychologists, historians and others what major ideas they have changed their minds about. Go to www.edge.org. It's good reading.

“BEWARE of geeks bearing formulas.” So saith Warren Buffett, the Wizard of Omaha. Words to bear in mind as we bail out banks and buy up mortgages and tweak interest rates and nothing, nothing seems to make any difference on Wall Street or Main Street. Years ago, Mr. Buffett called derivatives “weapons of financial mass destruction” — an apt metaphor considering that the Manhattan Project’s math and physics geeks bearing formulas brought us the original weapon of mass destruction, at Trinity in New Mexico on July 16, 1945.

In a 1981 documentary called “The Day After Trinity,” Freeman Dyson, a reigning gray eminence of math and theoretical physics, as well as an ardent proponent of nuclear disarmament, described the seductive power that brought us the ability to create atomic energy out of nothing.

“I have felt it myself,” he warned. “The glitter of nuclear weapons. It is irresistible if you come to them as a scientist. To feel it’s there in your hands, to release this energy that fuels the stars, to let it do your bidding. To perform these miracles, to lift a million tons of rock into the sky. It is something that gives people an illusion of illimitable power, and it is, in some ways, responsible for all our troubles — this, what you might call technical arrogance, that overcomes people when they see what they can do with their minds.”

The Wall Street geeks, the quantitative analysts (“quants”) and masters of “algo trading” probably felt the same irresistible lure of “illimitable power” when they discovered “evolutionary algorithms” that allowed them to create vast empires of wealth by deriving the dependence structures of portfolio credit derivatives.

What does that mean? You’ll never know. Over and over again, financial experts and wonkish talking heads endeavor to explain these mysterious, “toxic” financial instruments to us lay folk. Over and over, they ignobly fail, because we all know that no one understands collateralized debt obligations and derivatives, except perhaps Mr. Buffett and the computers who created them.

Somehow the genius quants — the best and brightest geeks Wall Street firms could buy — fed $1 trillion in subprime mortgage debt into their supercomputers, added some derivatives, massaged the arrangements with computer algorithms and — poof! — created $62 trillion in imaginary wealth. It’s not much of a stretch to imagine that all of that imaginary wealth is locked up somewhere inside the computers, and that we humans, led by the silverback males of the financial world, Ben Bernanke and Henry Paulson, are frantically beseeching the monolith for answers. Or maybe we are lost in space, with Dave the astronaut pleading, “Open the bank vault doors, Hal.”

As the current financial crisis spreads (like a computer virus) on the earth’s nervous system (the Internet), it’s worth asking if we have somehow managed to colossally outsmart ourselves using computers. After all, the Wall Street titans loved swaps and derivatives because they were totally unregulated by humans. That left nobody but the machines in charge.

How fitting then, that almost 30 years after Freeman Dyson described the almost unspeakable urges of the nuclear geeks creating illimitable energy out of equations, his son, George Dyson, has written an essay (published at Edge.org) warning about a different strain of technical arrogance that has brought the entire planet to the brink of financial destruction. George Dyson is an historian of technology and the author of “Darwin Among the Machines,” a book that warned us a decade ago that it was only a matter of time before technology out-evolves us and takes over.

His new essay — “Economic Dis-Equilibrium: Can You Have Your House and Spend It Too?” — begins with a history of “stock,” originally a stick of hazel, willow or alder wood, inscribed with notches indicating monetary amounts and dates. When funds were transferred, the stick was split into identical halves — with one side going to the depositor and the other to the party safeguarding the money — and represented proof positive that gold had been deposited somewhere to back it up. That was good enough for 600 years, until we decided that we needed more speed and efficiency.

Making money, it seems, is all about the velocity of moving it around, so that it can exist in Hong Kong one moment and Wall Street a split second later. “The unlimited replication of information is generally a public good,” George Dyson writes. “The problem starts, as the current crisis demonstrates, when unregulated replication is applied to money itself. Highly complex computer-generated financial instruments (known as derivatives) are being produced, not from natural factors of production or other goods, but purely from other financial instruments.”

It was easy enough for us humans to understand a stick or a dollar bill when it was backed by something tangible somewhere, but only computers can understand and derive a correlation structure from observed collateralized debt obligation tranche spreads. Which leads us to the next question: Just how much of the world’s financial stability now lies in the “hands” of computerized trading algorithms?

•

Here’s a frightening party trick that I learned from the futurist Ray Kurzweil. Read this excerpt and then I’ll tell you who wrote it:

But we are suggesting neither that the human race would voluntarily turn power over to the machines nor that the machines would willfully seize power. What we do suggest is that the human race might easily permit itself to drift into a position of such dependence on the machines that it would have no practical choice but to accept all of the machines’ decisions. ... Eventually a stage may be reached at which the decisions necessary to keep the system running will be so complex that human beings will be incapable of making them intelligently. At that stage the machines will be in effective control. People won’t be able to just turn the machines off, because they will be so dependent on them that turning them off would amount to suicide.

Brace yourself. It comes from the Unabomber’s manifesto.

Yes, Theodore Kaczynski was a homicidal psychopath and a paranoid kook, but he was also a bloodhound when it came to scenting all of the horrors technology holds in store for us. Hence his mission to kill technologists before machines commenced what he believed would be their inevitable reign of terror.

•

We are living, we have long been told, in the Information Age. Yet now we are faced with the sickening suspicion that technology has run ahead of us. Man is a fire-stealing animal, and we can’t help building machines and machine intelligences, even if, from time to time, we use them not only to outsmart ourselves but to bring us right up to the doorstep of Doom.

We are still fearful, superstitious and all-too-human creatures. At times, we forget the magnitude of the havoc we can wreak by off-loading our minds onto super-intelligent machines, that is, until they run away from us, like mad sorcerers’ apprentices, and drag us up to the precipice for a look down into the abyss.

As the financial experts all over the world use machines to unwind Gordian knots of financial arrangements so complex that only machines can make — “derive” — and trade them, we have to wonder: Are we living in a bad sci-fi movie? Is the Matrix made of credit default swaps?

When Treasury Secretary Paulson (looking very much like a frightened primate) came to Congress seeking an emergency loan, Senator Jon Tester of Montana, a Democrat still living on his family homestead, asked him: “I’m a dirt farmer. Why do we have one week to determine that $700 billion has to be appropriated or this country’s financial system goes down the pipes?”

“Well, sir,” Mr. Paulson could well have responded, “the computers have demanded it.”

For many people, the Internet is now their main channel of information. More and more time is spent online, whether reading the news, checking email, watching videos and listening to music, consulting encyclopedias and maps, making phone calls, or writing blogs. In short, the Web filters much of our access to reality. The human brain adapts to every new change, and the Internet represents an unprecedented one. What will its influence be? The experts are divided. Some think it could diminish our capacity to read and think deeply. Others think that in the near future technology will combine with the brain to increase intellectual capacity exponentially.

One of the most recent to raise the debate is the American essayist Nicholas G. Carr, an expert in information and communication technologies (ICT) and an adviser to Encyclopaedia Britannica. He says he no longer thinks the way he used to. It happens above all when he reads. He used to immerse himself in a book and could devour page after page, hour after hour. Now he can manage only a few paragraphs. He loses focus, grows restless, and looks for something else to do. "The deep reading that used to come naturally has become a struggle," Carr writes in his provocative article "Is Google Making Us Stupid?", published in The Atlantic. Carr blames his disorientation on one main cause: prolonged use of the Internet. He is convinced that the Web, like other media, is not innocuous. "[Media] supply the stuff of thought, but they also shape the process of thought," he insists.

"I think the greatest threat is its potential to diminish our capacity for concentration, reflection, and contemplation," Carr warns by email. "As the Internet becomes our universal medium, it may be retraining our brains to receive information very quickly and in small bits," he adds. "What we lose is our ability to sustain a line of thought over a long period."

Carr's argument has stirred some debate in specialist forums such as the online science magazine Edge.org, and in fact it is not far-fetched. Neuroscientists hold that all mental activity affects the brain at a biological level, that is, in the formation of neural connections, the complex electrical network in which thoughts take shape. "The brain evolved to find patterns. If information is presented in a particular way, the brain will learn that structure," explains Beau Lotto, a professor of neuroscience at University College London. He adds a caveat: "Then you would have to see whether the brain applies that structure to how it behaves in other circumstances. It doesn't necessarily have to, but it is perfectly possible."

What remains to be seen is whether this influence will be negative, as Carr predicts, or whether it will be the first step toward integrating technology into the human body and expanding the brain's capabilities, as the inventor and artificial intelligence expert Raymond Kurzweil predicts. "Our first tools extended our physical reach, and now they extend our mental reach. Our brains notice that they don't need to devote mental (and neural) effort to the tasks we can leave to machines," Kurzweil argues from New Jersey. He gives an example: "We have become less capable of doing arithmetic since calculators began doing it for us many decades ago. Now we rely on Google as an amplifier of our memory, so we actually remember things less well than we would without it. But that isn't a problem, because we don't have to do without Google. In fact, these tools are becoming more ubiquitous and are available all the time."

Pitting the brain against technology is the wrong approach, says Professor John McEneaney of the Department of Reading and Language Arts at Oakland University (US), who agrees with Kurzweil. "I think technology is a direct expression of our cognition," McEneaney reasons. "The tools we use are as important as the neurons in our skulls. Tools define the nature of the task so that the neurons can do the work."

Carr insists that this influence will grow much greater as Internet use increases. It is an emerging phenomenon that neurology and psychology will have to study in depth. For now, though, a pioneering report on online information-seeking habits, led by experts at University College London (UCL), suggests that we may be in the midst of a major shift in the human capacity to read and think.

The study observed the behavior of users of two research websites, one run by the British Library and the other by the Joint Information Systems Committee (JISC), a state-funded educational consortium that provides access to electronic journals and e-books, among other resources. When they compiled the logs, the researchers noticed that users "skimmed" information rather than lingering over it. They jumped from one article to another and seldom went back. They read one or two pages of each source and then clicked through to another. On average, they spent four minutes per e-book and eight minutes per e-journal. "It is clear that users are not reading online in the traditional sense; indeed there are signs that new forms of 'reading' are emerging as users 'power browse' horizontally through titles, contents pages and abstracts going for quick wins," the report states. "It almost seems that they go online to avoid reading in the traditional sense."

Experts stress how fast this change has come. "The Web has caused people to behave quite differently with respect to information. This might seem to contradict the accepted ideas of evolutionary biology and psychology that basic human behavior does not change suddenly," says Professor David Nicholas of UCL's School of Library, Archive and Information Studies, speaking from London. "There is a general consensus that we have never seen a change of this scale and speed, so this may very well be the case [of a sudden change]," he adds, citing his book Digital Consumers.

The transformation is unprecedented because the Internet is a new medium with the potential to encompass all the others. "Never has a communications system played so many roles in our lives, or exerted such broad influence over our thoughts, as the Internet does today," Carr says. "Yet, for all that's been written about the Net, there's been little consideration of how, exactly, it's reprogramming us."

This change in how we look for information and how we read would affect not only young people, who are assumed to spend more hours online, but people of all ages. "The same has happened to teachers, professors and general practitioners. Everyone exhibits a bouncing, skimming behavior," the report notes.

Carr insists that one of the key issues is the "shallow" mode of reading that is gaining ground. "In the quiet spaces opened up by the sustained, undistracted reading of a book, or by any other act of contemplation, for that matter, we make our own associations, draw our own inferences and analogies, foster our own ideas." The problem is that hindering deep reading hinders deep thinking, since the one is indistinguishable from the other, according to Maryanne Wolf, a reading and language researcher at Tufts University (US) and author of Proust and the Squid (published in Spanish by Ediciones B as Cómo aprendemos a leer). Her concern is that "unguided information can create a mirage of knowledge and thereby curtail the long, difficult, and crucial thought processes that lead to genuine knowledge," Wolf says from Boston.

Setting aside the warnings about the Internet's hypothetical effects on cognition, scientists such as Kurzweil welcome this influence: "The more we rely on the nonbiological part (that is, the machines) of our intelligence, the less the biological part works, but the combination as a whole becomes more intelligent." Others dispute this prediction. Greater dependence on the Web, they argue, would make users lazy and, among other acquired habits, lead them to trust search engines completely, as if they were the Holy Grail. "They use it as a crutch," says Professor Nicholas, who doubts that the tool will free the brain from the work of searching so that it can turn to other tasks.

Carr goes further, arguing that "skimming" benefits companies. "Their revenues rise as we spend more time online and as we increase the number of pages and pieces of information we view," he reasons. "Companies have a strong economic interest in our increasing the speed of our information intake," he adds. "That doesn't mean they deliberately want us to lose our capacity for concentration and contemplation. It's just a side effect of their business model."

Other experts considerably qualify Carr's forecast. The technology writer Edward Tenner, author of Our Own Devices: How Technology Remakes Humanity, joins in Carr's criticism but adds that the damage need not be irreversible. "I share the concern about the superficial use of the Internet, but I see it as a cultural problem that can be reversed through better teaching and better search software, not as a neurological deformation," he explains from New Jersey (US). "It's like people who are used to cars and lounge chairs but understand the importance of exercise."

In the end, scientists such as Kurzweil emphasize the Internet's potential as a tool for knowledge. "The Web offers the opportunity to hold all the computation, knowledge and communication there is. Ultimately, it will vastly exceed the capacity of biological human intelligence." And he concludes: "Once machines can do everything humans do, it will be a powerful combination, because it will be joined with the ways in which machines are already superior. But we will merge with this technology to make ourselves smarter."

At last, we have a black swan. The credit crisis began last year soon after the publication of Nassim Nicholas Taleb's bestselling The Black Swan, which tackled the impact of unexpected events, such as the discovery of black swans in Australia by explorers who had thought all swans were white. ...

...Prediction markets, summing the market's wisdom, had it wrong. Last week, the Intrade market put the odds that the Tarp would have passed by now at more than 90 per cent.

Models using market extremes to predict political interventions were also fooled. When volatility rises as high as in the past few weeks, it has in the past been a great bet that the government will do something—which is in part why spikes in volatility tend to be great predictors of a subsequent bounce.

Taleb himself suggested recently that investors should rely least on normal statistical methods when they are in the "fourth quadrant"—when there is a complex range of possible outcomes and where the distribution of responses to those outcomes does not follow a well understood pattern.

Investors were in that quadrant on Monday morning. They were vulnerable to black swans and should not have relied on statistics as a guide.

One prediction for the future does look safe, however: investors will spend much more time making qualitative assessments of political risk.

JOHNSON: To get back to Taleb again, obviously, this piece on Edge is really food for thought. He mentioned Mandelbrot sets and fractals and power laws, where you have a small number of extreme events and a lot of smaller, less extreme events. But his underlying point is that he didn't believe for a moment that these mathematical models actually explained reality, or financial marketplace reality in any case. They are ways to think about it, ways to get a handle on it, but basically, it's too complex for us to understand.

I found that very refreshing, since the thing that strikes me sometimes about the universe, when we get to the ultimate questions, is that we have these wonderful tools that are very helpful, but you can't mistake the map for the reality. It's that old saw: don't mistake the map for the territory.

HORGAN: We're all bozos on this bus....

295 grams for 359 dollars: the Kindle reader stores up to 200 books

When the seventh and final volume of the Harry Potter series appeared on 21 July 2007, more than ten million copies were sold within twenty-four hours. People spoke of a logistical masterpiece and of the triumph of a venerable medium whose imminent demise has been announced every so often for many years. Never before in the centuries-old history of printing had a single book spread so quickly. But what would have happened if everyone had been able to download the work from the internet as an electronic book? How many people would have taken advantage of that option: twenty million, perhaps fifty million? Whatever the number, for the traditional book trade, for which only a Potter year still counts as a good year, it describes a disaster scenario. E-books are sold exclusively on the internet, so brick-and-mortar bookshops cannot earn anything from them.

That is only one of many reasons why the Kindle, as Amazon has christened its new electronic reading device, arouses great fears alongside enthusiasm about technical progress. For the first time since such reading devices were introduced about ten years ago, the Kindle and its kin, such as Sony's Portable Reader, the Cybook and the iLiad, threaten to give the printed book serious competition. Thanks to a new display technology that works with a kind of electronic ink, reading on the new devices is as comfortable as reading at a computer screen, and it even works outdoors in daylight.

An electronic Potter fire nipped in the bud

One could dismiss these devices as handy aids, as digital pack mules and a mundane alternative to the beautiful book. After all, it really is convenient, when traveling, to have a veritable reference library of several hundred volumes stored in a small, rather ugly plastic box that is hardly bigger or heavier than a paperback and also offers such useful functions as full-text search. But while some speak joyfully of the greatest evolutionary step since the invention of printing, others anxiously ask what upheavals may await the most important medium in cultural history. So far only one thing seems certain: everyone who has anything to do with books, whether they write, print, bind, publish, ship, sell or read them, is likely to be affected by the new technology in one way or another.

Literally translated, “to kindle” means to ignite or set alight. Joanne K. Rowling nipped in the bud the electronic Potter fire that the Kindle might have sparked when she decided that her works may not appear as e-books. There is heated speculation about the former teacher's motives, including on the website of Amazon's e-book department, the “Kindle Store”. Potter fans who are also Kindle fans vent their anger there, suspect the author of anti-American intentions, or simply call her “the incarnation of evil”.

Is the book trade facing the fate of the music industry?

For some days now, the Kindle Store has carried a post by a man about whom nothing is known except his name, and even that may be invented. We know nothing about John Newton except that he owns a Kindle and lends his voice to all those who want to deny what may be the world's best-selling author the right to her intellectual property. John Newton holds that it is entirely irrelevant whether Joanne K. Rowling consents to the Harry Potter books appearing as e-books. His only argument is brutally matter-of-fact: “These e-books already exist.” All the author can still decide, he says, is whether she wants to give her readers the option of obtaining a Harry Potter e-book “legally” as well.

This is a thinly veiled call for pirate copying, the form of everyday internet piracy that brought the music industry to the brink of ruin after the arrival of the iPod. Is the book trade now facing a similar fate?

The most beautiful invention in the world

“What's wrong with the e-book?” asks the Turkish Nobel laureate Orhan Pamuk, and immediately wants to know how many Kindle users there already are in Germany. His agent strongly advises restraint, he says, but he himself has no objection in principle. Pamuk is an international author, with almost as many readers in Germany or the United States as in Turkey. He is open to new markets: “The e-book will most likely be nothing more than a further complement to the hardcover, like the paperback or audiobooks. I wouldn't mind if this way I found another 100,000 readers.” The Nobel laureate does not seem to know that the Kindle Store already offers four of his titles. Many writers whose books are available there are probably in the same position.

Pamuk's German publisher takes a decidedly different view from his author. Michael Krüger, who has worked in the German book trade for four decades and has long been one of its defining figures, cannot imagine how anyone could “voluntarily give up the most beautiful invention in world history”: “The book is the only object of our civilization that we can truly be proud of. If it is now to be turned into a multifunctional storage device, that is in keeping with the course of the times, which want to turn our civilization into an electronic hell. So one must resist. But since these things cannot be wished away, and intellectual property evaporates in electronic networks anyway, we will of course license our rights.” How, and on what terms, that might happen is as unclear to Krüger as it is to his colleagues at Suhrkamp or S. Fischer Verlag. Wherever one listens in German publishing these days, whether the subject is fiction, nonfiction or children's books, the answer sounds much like the one from Carlsen, Joanne K. Rowling's German publisher: they have only just begun to look into the matter.

When the medium calls with force

Yet the Kindle has been on the market in the United States for ten months. Amazon is said to have sold about 300,000 devices there so far and currently offers 166,000 electronic titles: new releases, bestsellers, classics and a few magazines and daily newspapers. Prices range from 99 cents for a classic such as Emily Dickinson's poems to 9.99 dollars for a current bestseller. Orhan Pamuk believes that in the virtual world of the e-book, authors will have to be paid better than in the real world, where they usually receive between ten and fifteen percent of their books' retail price. After all, Pamuk says, e-books cost publishers far less to produce and distribute. Michael Krüger naturally sees it differently: “We all make most of our revenue from the hardcover. If revenue there is halved because the e-book costs only half as much as the printed book, sooner or later every publisher will shrink to half its current size.”

But the e-book threatens to call into question far more than the current economic foundations of our book culture. It could change the act of reading itself. As if Amazon's engineers had Heidegger's dictum that technology itself prevents any experience of its essence in the back of their minds, they practice a determined mimesis of the book. The Kindle sits in a leather case as if between two covers, and its screen shows, page by page, exactly what the printed book shows. Vittorio Klostermann, Heidegger's publisher, uses an electronic reader himself when traveling: “As a working tool for heavy readers, the e-book could become much more important, but it is also likely to accelerate the alienation from the book. Already we can observe at every university that more and more students take notice only of the texts available to them online at their electronic workstations.” Klostermann does not rule out Heidegger's works appearing as e-books: “If the medium really calls with force, we cannot stand aside.”

Our reading tools, too, work on our thoughts

Amazon reportedly plans to offer students and readers of specialist literature a larger Kindle soon, one that can display tables, charts and illustrations of all kinds more clearly. The full-text search the Kindle allows already makes it interesting for anyone who works with books. But reading is something other than searching, which always aims only at an excerpt, the quotation as a fragment, and thereby fragments the act of reading itself. What does it actually mean when the linearity of reading, practiced for thousands of years, is increasingly dissolved? Must concentrated reading, immersion in a single work, not eventually become impossible?

Many observers believe the e-book could quickly gain ground above all in nonfiction. John Brockman and his wife Katinka Matson are among the most influential players in American publishing. The literary agents, who specialize in scientific publications and popular nonfiction, predict a great future for the new technology. She herself still prefers reading a book, says Katinka Matson, but the Kindle is simply “much more practical, a really cool device: I can lie in bed and download any book from the net.” Brockman and his wife are convinced that the Kindle will revolutionize our reading habits. “But that doesn't mean books have to be written differently, and authors shouldn't take the Kindle into account when conceiving their works.”

If Nietzsche's dictum that our “writing tools” work along with our thoughts is true, then the media through which we read also shape the process of our reading. The sweeping changes that came with the invention of the typewriter arrived on the high-buttoned shoes of secretaries, as the cultural historian Bruce Bliven put it. Amazon's digital reader creeps up silently via Whispernet: it is the book that came out of the ether.

Stephen King pricks up his ears

Via the Whispernet connection, any book offered in the Kindle Store can be downloaded within sixty seconds. So far, mostly popular fiction is said to have sold: thrillers and titles from the current bestseller lists. Stephen King, one of the most widely read authors in the world with total sales of four hundred million books, has always been interested in what new media can offer him. Eight years ago he put his story “Riding the Bullet” online in full as a download before it appeared as a book.

Now, for his fans, to whom he likes to present himself as “Uncle Stevie”, he has put Amazon's reader to the test. His verdict: the Kindle serves readers well and will therefore catch on. But will it ever displace the printed book? King's answer: “No. The permanence of the printed book underscores the importance of the ideas and stories we find in it. Only the book gives the fleeting, fragile medium permanence and stability.” Nevertheless, he says, he has held all his life that the story being told matters more than the system that carries it, the author included. It is interesting that in this context King explicitly stresses the advantages of the audiobook over the printed book, as if to point out that the anthropological constant of people telling one another stories is older than writing and may well outlast it.

Crucial for every writer

Felicitas Hoppe has just opened up a new path for a very old story. Her latest book ventures to retell Hartmann von Aue's eight-hundred-year-old verse epic for our time. “Iwein Löwenritter” has appeared as the first title in a new children's book series from S. Fischer Verlag. The books are cloth-bound, thread-sewn and lavishly illustrated. “We want to make bibliophile books for bibliophile young readers,” the publisher says.

This bold leap into a hitherto unoccupied niche of the young readers' market is meant to awaken young readers' feeling for the aesthetic beauty of the book. Even so, Felicitas Hoppe would not mind if “Iwein Löwenritter” appeared as an e-book, as long as the printed edition were not displaced by it: “Whether a work is printed or not is crucial for every writer. It is the book as an object that, in our eyes, gives the text its value. I don't believe that will ever change.”

Break-in attempts on the closed system have already begun

But doesn't a good deal suggest that the e-book will impose a new three-class model on an infinitely differentiated book market? Perhaps within a few years there will be titles that appear only as e-books; others will be offered in both digital and printed form; and a third group could consist of especially beautifully designed books that derive their exclusive appeal not least from the fact that they are not available online. The e-book would then both disenchant the book and help restore its aura.

No one can yet say how, or to what extent, the e-book will change our book-shaped world, or how far it will influence reading habits or the act of reading itself. How will children react to the new medium? Amazon evidently already has this target group firmly in its sights: the improved standard version of the Kindle announced for next year is supposed to have a color screen, making the product more attractive to young readers. And how will the dispute over the undisguised monopoly ambitions of the world's largest online bookseller end?

While Sony's Portable Reader and the iLiad display common e-book formats such as PDF, the Kindle accepts only Amazon's own format, thereby erecting a closed system that shuts out other providers and keeps customers on a short leash. The customer's path into the seemingly boundless new world of books is meant to lead exclusively through the digital needle's eye of the Kindle Store. Whatever is not offered there is out of reach for Kindle users and has no digital life. Accordingly, instructions for cracking the Kindle's code are already being discussed on the relevant websites.

The butterfly's wings

It is not only the economics of the book market that faces change. On the long road of the profanation of the written word, an unprecedented break looms. The Gospels of Saint Augustine of Canterbury, a sixth-century manuscript from northern Italy, were kept for centuries not in the monastery library but, like a relic, on the altar of the church itself. The fifteenth century celebrated printing as the divina ars, the divine art, given to mankind so that the word of God might spread more quickly. Yet as early as around 1550, people began to complain that the mass production of printed books was damaging the mystique that had surrounded the rare and precious manuscripts of the Middle Ages.

The Kindle aims not at trivialization but at the disappearance of the book as a sensual object that smells, ages and can be touched. The veneration of the book has its deepest and oldest roots in religion, and the processes of enlightenment and secularization did not destroy this veneration but let it grow further roots. Anyone who picks up Amazon's reader today is, in the first hours and days of the experience, impressed by the device's technical possibilities. When he then returns to his bookshelf, the aura of the book will seem to him as delicate and vulnerable as a butterfly's wing.

The question is as maddening as it is quadrennial: How do the amoeba middle, the undecided, the independent, the low-information voters make up their minds?

What on earth goes through their brains?

We’re not talking about the partisans, the roughly 80 percent of voters who lean toward one party or the other and will generally go that way, assuming the candidate meets a reasonable threshold.

We’re talking about the other 20 percent. Some of these people like to guard their independence — studying issues, weighing candidates’ resumes and proposed solutions. That’s only a tiny percentage of the 20 percent, though. For the most part, this group doesn’t know much about public policy, or its knowledge is instinctual, with basic hard-wired ideas of justice.

This is not meant to be an insult. They’re busy. They hate politics. Can’t blame them.

“The decisive bloc of voters may indeed be people who don’t follow issues, so character may matter quite a bit,” said Michael McDonald, an expert on voter behavior at the Brookings Institution and George Mason University.

Within this context of “character,” how do voters make up their minds?

There are many theories, none conclusive.

“Why People Vote Republican,” a recent essay by University of Virginia psychologist Jonathan Haidt, offers some clues, tying “why” to the origins of morality.

He posits that liberal-leaning Americans tend to subscribe to social contract ethics: You and I agree we’re equals, and we won’t get in each other’s way. The basic values: fairness, reciprocity and helping those in need.

Social contract liberals tend not to care so much about victimless crime.

Traditional morality, however, arises out of a more ancient need to quell selfish desires and thus create strong groups, Haidt argues. Its adherents do this by demanding loyalty to the group, respecting authority and revering sanctified traditions, symbols, etc.

People often fall into one or the other category, and a candidate who speaks to these instincts will win over these voters.

Democrats who disrespect or fail to understand the second type of morality — the morality of sanctified symbols, authority and loyalty to group — do so at their peril, as when Sen. Barack Obama talked about bitter Americans clinging to God and guns.

Other theories:

University of California, Berkeley, linguist George Lakoff thinks conservatives are more aware of the importance of metaphor and language, and thus frame political debates to their advantage. So, for example, President Bush proposed “tax relief,” which made the current tax structure seem like an affliction. Who could oppose that? Examples are endless.

New York Times columnist David Brooks pointed this year to Princeton University psychologist Alexander Todorov, who claims he can predict the votes of 70 percent of test subjects by their facial reactions when seeing a candidate for the first time.

Other theorists think voters have an emotional response to candidates, and then create post-hoc rational reasons for supporting a candidate. They personally and emotionally like Arizona Sen. John McCain, and then come up with some reason: He’s against earmarks.

Brookings’ McDonald tends to work in the other direction. He thinks issues do matter, and quite a lot. In his view, people make a determination about whether they agree or disagree with the candidate on issues, and then fill in character blanks.

Such as: Candidate X wants to cut taxes, and I want a tax cut. Suddenly Candidate X seems more like someone I’d like to have a beer with, as the (in)famous saying goes.

Or, Candidate Y wants to raise taxes, and suddenly the voter starts to think the candidate is a wine-swiller who’s out of touch with the voter’s values.

In this determination, I fill in character traits based on whether I agree or disagree with the candidate on the issues.

But what if I have little or no information on the candidate and his issues?

Now we’re back to that 20 percent of voters, and again trying to figure out what moves them.

Or as McDonald put it: “The dirty little secret of American politics is that the least informed decide the winner.”

Sun reporter Joe Schoenmann contributed to this story.

In his latest podcast (or as he insists on calling it – podgram) Stephen Fry speaks, among other things, about the “soul danger” that every blogger or columnist faces. A podcast, incidentally, is a sort of radio programme available on the net that you can download and listen to at your leisure. Anyway, Fry believes that the “soul danger posed by having one’s own space – whether blog or column – is that it just turns you into a sort of prating imbecile, an overweeningly proud person ... like a columnist who thinks he has a right to yell his furies to the world”.

That was my own transcript, by the way, so any mistakes are mine. In any case I share Stephen’s worries completely. Every time I sit down to type my excessively long column, I am burdened by the thought that this is, after all, just a collection of thoughts by someone who may very well be perceived as a pompous ass – and if Stephen Fry has these misgivings, then I should definitely be doing some worrying myself.

I worry all the more today since the subject up for discussion is not a light one. Events of the week have dictated, without an ounce of doubt, that I talk about such enormous subjects as why we are here and how we are supposed to make the best of it by concocting the best way to live happily together. Only a pompous ass could take on the subjects of philosophy, particle physics, ethics and morality all in one go. A pompous ass or a politician... but that is another story.

The big issue

First for the number lovers: 800 million particle collisions a second, 27 kilometres of tube, 4.4 billion protons, walloping into each other at 99.9 per cent of the speed of light in temperatures 100,000 times hotter than the sun. That, my friends, is what is going on at CERN – that’s the European Centre for Nuclear Research (and no, they’re not dyslexic, they’re just French speaking). The scientific world has got ever so much closer to finding out the meaning of life. Or so they tell us bombastically, when all they are doing (pardon the understatement) is looking for antimatter, hidden matter, anti-gravity and the things that make up 95 per cent of our Universe, which we don’t know anything about.

The way the press has put it, it seems that the CERN machine – the Large Hadron Collider – is our Toto, rushing behind the curtain to discover that the mighty Wizard of Oz is just an old man showing off behind a load of contraptions. For the more controversial aspect of the issue read: we might be about to discover whether God exists. They just love that assertion. The scientific community isn’t particularly bothered with the deistic part of the equation – most of them solved that equation ages ago and are more bothered with $100 bets as to whether the Higgs boson exists. (Trust me, just look it up and read about it... I could not explain it if I wanted to).

Yep. Stephen Hawking and Peter Higgs have a bet going. Hawking bets Higgs is wrong and Higgs thinks that the genius on wheels is talking rubbish. The setting for this bet is a rather expensive piece of equipment worth many, many billions of whatever currency you fancy. Its main function is to make stuff collide. Protons, actually. Because by colliding protons you get to see what they are made of. Don’t you just love the scientific method? How do you discover the stuff of life? Just bang a lot of it together in a rather expensive manner. Who said science wasn’t fun?

So boo to you Father Rice (that’s my school science teacher)... I was right after all when I smashed that test tube... this too is science. Just like the inquisitive toddler intent on discovering the primary function of whatever he lays his hands on, our scientific community is busy banging stuff together. Only there is a minor hitch. Or worry. Let’s say it could be major.

Black holes

Well, how shall I put it? The experiment comes with a bit of a warning. Some scientists have speculated that since what we are in essence doing is reproducing THE original big bang, albeit in a miniature version, one very possible side effect is the creation of a black hole. Now I do not know what you think but I am not very comfortable with the idea of an all-devouring orifice suddenly materialising (or de-materialising?) on the Franco-Swiss border. Quite frankly I think it sucks.

To be fair, there seems to be an infinitesimal chance of what I described ever happening, and one of the 9,000 scientists drooling over the banging experiment thingy assures the world that the worst that can happen is a minor explosion, with the $3.8 billion tunnel and equipment suddenly reduced to rubble. I’d hate to be there if it happens. Considering that the human race is currently many steps closer to answering questions like “What is the world made of?”, “Why are we here?” and “Bovril or Marmite?”, it is surprising that not enough fuss is being made about it all.

Or should I say that the wrong kind of fuss is being made in some quarters? “Wrong”, of course, depends on your particular perspective, but when you get people commenting (it’s them again) that that kind of money would have been better spent saving the poor of the world, you begin to wonder whether you are really pompous or whether you are definitely sitting on the right side of the wrong/right demarcation line. It’s not just us you know. There have been varying levels of reactions in the world: from the German schadenfreude (in Bild), which seemed rather unruffled by the potential end of the world situation, to the mass frenzy in the Indian subcontinent.

It seems that the Indian media is to shoulder much of the blame for reporting the event in “End of the World Is Nigh” fashion. A teenage girl in India went as far as killing herself by ingesting pesticide – worried that the world was coming to an end on 10 September. The cynic inside me leads me to say that she was right ... in a way... and maybe that is what it’s all about. The rumour doing the rounds on the net is that Nostradamus predicted that Geneva would be a good place not to be around in at this time. Superstition and conspiracy theories abound. The line between reason and blind faith is being tested as we speak.

I was particularly intrigued by the fact that in a UK Times survey of reactions in different countries, it was the Kenyan caretaker who reflected the Maltese mentality most. He expressed bewilderment when it was explained to him that scientists were seeking to answer the eternal question of how the Universe started. “This seems a very strange thing to spend money on... we could use that money in a much better way”.

Judas’ problem

It reminded me of the Judas and Jesus episode – which is well illustrated in that wonderful musical Jesus Christ Superstar. You know the one I am talking about – when Jesus was getting his tired feet rubbed with an expensive balm and Judas rebukes him for not having spent the money for the balm on a better cause. There may be no point to my quoting this particular story – I may even get someone telling me that even the devil can quote scriptures for his devilish purpose – but there is a common thread to all this.

The whole LHC issue has brought to the forefront of discussion the whole Religion v. Science debate. What business have we to question the work of the blind watchmaker? Shouldn’t science be devoting time and money to such things as that elusive cure for cancer? Well it should... and it is. This week’s Economist headlines the great strides being made in that field, particularly with regard to identifying the source of the problem as lying with stem cells. What the “religious” crowd fail to fathom is that this is not all about them. It is about making advances in what we are about and maybe... just maybe, that this too could be a step towards an important discovery that makes our lives better.

At about the same time as all this is going on, there is a parallel debate in the UK about whether Creationism should be included in science class. Until now the recommended course of action has been to refer that sort of discussion to religion class while explaining that creationism is not in itself scientific. To my mind it should be a no-brainer for believer and unbeliever alike. After all, creationism requires faith; science requires proof. They are two legitimately different compartments that can only be mixed at the users’ peril. The moment you require proof from a believer or faith from a scientist you have breached the barrier of non-communicative disagreement.

The values of life

That leads me to the other big issue for me this week. Admittedly it has not been in the news but my meanderings on the net led me to an extremely interesting article by Jonathan Haidt – a researcher on psychology and emotion. In his article “What makes people vote Republican?”, Haidt examines the divide between liberals and conservatives and the values they hold to heart. It is an eye-opener for righteous liberals like myself who tend to believe that a conservative position is rooted in narrow-minded blindness.

What Haidt concludes is that the value structure of liberals is radically different from that of conservatives (duh!). In essence, conservatives value norms because they provide stability. Whether those norms emanate from socially developed values or from belief systems, the inherent stability they provide when everybody adheres to the system makes them something worth fighting for.

For millennia, the guiding light for a large number of societies has been a single source, such as religious belief. This leads to society being seen as an entity in itself, one that values its own integrity and its identity as a collective. Look back at ancient norms – just open your Bible at Leviticus and you will find rules about menstruation, who can eat what and who can have sex with whom. Oftentimes rationality is not the basis of these laws, but they provide comfort and a set of guidelines to live “safely”.

Liberals and libertarians would do well to understand the importance of this set of values to the conservative elements of society. Whenever they call for diversity and individual rights, they should also understand why a conservative feels threatened by this sudden deviation from the norm. Which is not to say that either liberals or conservatives are right. But when you have an Archbishop speaking from the pulpit and confusingly throwing divorce, abortion and euthanasia into the same basket in order to put the fear of God into you, it helps to know where he is coming from.

Surely his eminence understands that not everyone in society has chosen his code of conduct as a guideline for how to live happily. Surely he knows that his idea of what is best for society does not necessarily coincide with the idea of happiness of the entire population. Surely his confusing presentation of lumping completely different arguments in one basket was a cheap shot at what he considers as grave threats to his preferred set of social values.

Live and let live

Black holes, divorce and values. It’s a tough one this week. I must admit that it has been difficult to handle, summarise and/or provoke. I invite you all to my blog to continue the discussion. Bear in mind that the basic tenet in J’accuse is respect for your interlocutor. The basic answer to the questions of the universe may be 42, and you may be liberal or conservative, but as I remind my readers occasionally... it’s my blog... and I’ll cry if I want to.

This has been J’accuse, in the words of another blogger... thinking so you don’t have to!

Jacques blogs daily on http://jaccuse.wordpress.com. We will still be taking comments, at least until the black hole gobbles us all up.

Psychologist Jonathan Haidt has a long piece titled “What Makes People Vote Republican” on Edge.org. Don’t be put off by the whiffs of liberal-intellectual snobbery in Haidt’s opening remarks. He has interesting things to say, and the follow-up discussion is very good. Snobbery-wise, Roger Schank takes the palm at the end of those follow-ups:

The Haidt article is interesting, as are the responses to it, but these pieces are written by intellectuals who live in an environment where reasoned argument is prized. I live in Florida.

“You can stand on my wagon, if you want.”

I tend, when I’m not in big crowds, to forget that I’m short. In Republican crowds, I find, I feel particularly small.

And dark. And unsmiling. And uncoiffed, unmade-up and inappropriately dressed.

For the McCain/Palin rally in Fairfax, Va., on Wednesday, the organizers had asked people to wear red. I – unthinkingly – had dressed in blue, which was somewhat isolating.

I was isolated, too, because, unable to find the press area in the crowd of about 15,000, I was out with the “real” people. Which meant that I could hear everything from the podium and from the onlookers around me, but could see nothing, not, at least, until the mom beside me stopped struggling to balance atop her Little Tikes wagon with two toddlers in her arms and another screaming at her feet, and offered me a go at the view.

(“It’s Sarah. Sarah’s going to be the vice president,” she had told the little girls, clad in their matching polka dot dresses. “Sarah Palin.”)

She was a nice woman. She told me history was in the making. She told me where to get lunch. She handed me back my reporter’s notebook when one of her almost-two-year-old twins, fixing me with a dark look of mistrust, took it away. “Liberal media, eh?” her solemn eyes glared. “Well, watch what you say about my mommy and Our Sarah.”

Do not think for a moment that I was being paranoid.

Fred Thompson had warmed up the crowd, his familiar old district attorney’s voice restored to full bombast, and he’d been in fine form, denouncing – to loud boos from the crowd – the “lawyers and scandal mongers and representatives of cable networks” (boos from the crowd) who were at that very moment descending upon Alaska looking for dirt on their Sarah.

“I hope they brought their own Brie and Chablis with them,” he’d said, to raucous laughter, as I willed myself to disappear, remembering, with a shudder, that my children had demanded Brie for breakfast only that morning.

I should have been finding this funny. My whole plan, after all, had been to write something funny this week about the whole Sarah Palin phenomenon. I’d arrived at an if-you-can’t-beat-’em-laugh-at-’em kind of a juncture, I suppose.

I’d planned to make attending the McCain/Palin event a silly sort of adventure. I’d invited a friend who has six kids to come with me. I figured funny things were bound to befall us in Palin-Land, where, collectively, we’d have eight children between us (a funny thought in and of itself). A Harold and Kumar Escape from the Barracuda sort of storyline was the idea – until my friend, done in by one too many sleepless nights, declined to accompany me, and I had to venture off alone.

And, forced to make new friends on the spot, discovered that the Palin Phenomenon is no laughing matter.

Those who think that it is — well, as Thompson warned on Wednesday, “they’ve got another thing coming.”

I made my first friend on the shuttle bus that took us from a nearby mall, where we’d been instructed to park, to the field where the rally was held. She was from Leesburg, Va., an ardent McCain supporter, conservative and self-described “soccer mom,” who grew up in Pennsylvania among girls who went hunting with their dads.

Sarah Palin, she told me, “just seems like a regular person.”

I did not argue with her. One does not argue when making new friends. And besides, we had so many other things to bond over. We talked about kids with issues. She had a son with A.D.H.D., cousins with Asperger’s and dysgraphia, and a nephew with autism. (“They’re lucky they live in New Jersey. New Jersey’s pretty progressive,” she said.)

We talked about the moral vacuity of modern parenting. “I see extreme spoiling, self-absorption,” she said. “Constant bringing the kids up to love themselves without reflecting on how they affect others.” We talked about the disastrous lack of respect that children now show adults and institutions, and about the ways this lack of respect translates into a very ugly sort of lack of decorum and a lack of basic manners: “This 10-year-old, my daughter’s friend, she comes over and throws down a magazine with John McCain on the cover. ‘Here’s friggin John McCain,’ she says. ‘Let’s see what lies he’s going to tell now.’” She continued: “These 10-year-olds think they’re better than me. That they don’t have to say hello. That they think I’m beneath them.”

You go girl, I was thinking, in so many words, until the talk turned back to politics: “So often these kids that are so incredibly full of themselves, I find their parents are Democrats. The Democrats, they hate ‘us,’ the United States, but they love ‘me,’ that is, themselves,” she said.

I heard a lot more talk that day about the need for respect – and about arrogance and selfishness and about Democrats and liberals who think way too highly of themselves.

Fred Thompson on the liberal media: “This woman is undergoing the most vicious assault … all because she is a threat to the power they expected to inherit and think they’re entitled to.”

Businessman Scott Maclean on the Democratic Party: “Their attitude is: you don’t get it and they don’t expect you to get it because they’re smarter than you – and I hate that.”

I heard, repeatedly, a complaint about sterile individualism, about selfishness and the desire for a revalidated “us” – from John McCain’s boilerplate attack on “me-first Washington” to this curious reflection, from a mother of nine, on the field with eight of her children, on the question of whether she, like Palin, could ever imagine balancing the demands of her large family against a high-profile political career like Sarah’s.

“My daughter asked me, ‘Mom, would you do that if you had the opportunity?’” she recalled, as the six-year-old in question looked on. “I said ‘I don’t know. Maybe she was born to do that. Maybe that’s the sacrifice she has to make to serve her country.’”

The daughter lifted high her hand-painted, flower-adorned Palin sign.

“She’ll really be a big step forward for women,” the mother said.

No, it wasn’t funny, my morning with the hockey and the soccer moms, the homeschooling moms and the book club moms, the joyful moms who brought their children to see history in the making and spun them on the lawn, dancing, when music played. It was sobering. It was serious. It was an education.

“Palin Power” isn’t just about making hockey moms feel important. It’s not just about giving abortion rights opponents their due. It’s also, in obscure ways, about making yearnings come true — deep, inchoate desires about respect and service, hierarchy and family that have somehow been successfully projected onto the figure of this unlikely woman and have stuck.

For those of us who can’t tap into those yearnings, it seems the Palin faithful are blind – to the contradictions between her stated positions and the truth of the policies she espouses, to the contradictions between her ideology and their interests. But Jonathan Haidt, an associate professor of moral psychology at the University of Virginia, argues in an essay this month, “What Makes People Vote Republican?”, that it’s liberals, in fact, who are dangerously blind.

Haidt has conducted research in which liberals and conservatives were asked to project themselves into the minds of their opponents and answer questions about their moral reasoning. Conservatives, he said, prove quite adept at thinking like liberals, but liberals are consistently incapable of understanding the conservative point of view. “Liberals feel contempt for the conservative moral view, and that is very, very angering. Republicans are good at exploiting that anger,” he told me in a phone interview.

Perhaps that’s why the conservatives can so successfully get under liberals’ skin. And why liberals need to start working harder at breaking through the empathy barrier.

In a legendary series, the online magazine "Edge" gathers contributions from the world's most renowned scientists and asks them, among other things: What do you believe is true even though you cannot prove it? SPIEGEL ONLINE presents selected answers.

I would like to return here to a proposal I first made in 1991: a third culture, "consisting of those scientists and other empirically minded thinkers who, through their research and their accompanying writings, are taking over the role of the traditional intellectuals in making visible the deeper meaning of our lives and redefining who and what we are." In 1997, the rapid growth of the Internet made it possible to give the third culture a home of its own online, with a website called Edge.

Edge is a playground for the ideas of the third culture and shows this new community of intellectuals in lively exchange. Participants can present not only their own projects and ideas but also comment on those of other third-culture thinkers. This is done deliberately in a spirit of critical engagement, and the result is a rigorous, high-voltage, sharp discussion of the fundamental questions of the digital age, in which "elegant argument" counts for more than comforting wisdom.

Edge presents speculative ideas and explores new territory in evolutionary biology, genetics, computer science, neurophysiology, psychology and physics. Among the fundamental questions raised in this setting are: What are the origins of the universe? What are the origins of life? And what are the origins of the mind? Out of the third culture are emerging not only a new natural philosophy but also new ways of thinking that call into question many of our basic assumptions about who we are and what it means to be human.

THE EDGE QUESTION

One branch of Edge is The World Question Center, a "conceptual art" project introduced in 1971 by my collaborator and friend, the artist James Lee Byars, who died in Egypt. I had met Byars in 1969, when he approached me after the publication of my first book, "By the Late John Brockman." Not only did we both live in the art world, we also shared an interest in language, in the uses of the question form, and in "the Steins": Einstein, Gertrude Stein, Wittgenstein and Frankenstein. Byars gave me the idea for Edge and came up with its motto:

"To explore the limits of knowledge, you have to invite the most brilliant and interesting people, gather them in one room, and have them ask one another the questions they otherwise ask only themselves."

THE AUTHOR

John Brockman, a former performance artist, editor of the online magazine Edge and founder of the "Third Culture," runs a literary agency in New York and has published numerous books, including "Leben was ist das? Ursprünge, Phänomene und die Zukunft unserer Wirklichkeit" (January 2009).

In Byars's view, it would be simply foolish to try to arrive at an axiology of society's existing knowledge by reading one's way through entire libraries. (He himself never kept more than four books at a time in his sparsely furnished room; he stored them in a box and swapped each one out once he had read it.)

He planned to lock the world's hundred best minds away together so that they would "ask one another the questions they were asking themselves." The outcome he envisioned was a synthesis of all thought. But many pitfalls lay between plan and execution. Byars chose his hundred candidates, phoned them, and asked what questions they were asking themselves. The result: seventy of them hung up without a word.

By 1997, however, the Internet and e-mail had created good conditions for realizing Byars's grand plan, and that led to the founding of the Edge website. Among the first contributors were Freeman Dyson and Murray Gell-Mann, both of whom had also been on his list of the best back in 1971.

For each of Edge's eight annual editions, I have used the question form myself, asking contributors to respond to a question that occurred, in the middle of the night, either to me or to one of them. The 2005 Edge question was proposed by the theoretical psychologist Nicholas Humphrey:

"Great minds sometimes arrive at insights before they have the evidence or arguments to support them. (Diderot called this visionary gift the 'esprit de divination.')

What do you believe is true even though you cannot prove it?"

This question opened many people's eyes (the broadcaster BBC Radio 4 judged it "fantastically stimulating ... it stimulates the thinking world like a cocaine cocktail"). The answers collected here place the emphasis on consciousness, on knowledge, and on ideas about truth and proof. If I had to name what they have in common, I would say that together they form a commentary on how we deal with an excess of certainty. We live in the age of search culture, in which search engines such as Google are leading us into a future where an abundance of correct answers goes hand in hand with a correspondingly naive sense of conviction.

In that future we would be able to answer the questions, but would we be smart enough to ask them? This book argues for a different approach. It might be perfectly fine not to feel entirely certain, but simply to have a hunch and follow it. As the British evolutionary biologist Richard Dawkins said in a 2005 interview about that year's Edge question: "It would be quite wrong to assume that science already knows everything. Rather, it feels its way forward through hunches, guesses and hypotheses, sometimes inspired by poetic or even aesthetic insights, and then tries to substantiate its ideas through experiment or observation. And that is precisely the beauty of science: it has to pass through this imaginative phase before it can move on to testing and proof."