Front Page

DEFGH No. 63, Friday, March 15, 2019.

Collage: Stefan Dimitrov

The Ghost in the Machine

Artificial intelligence inspires wild fantasies, but remains hard to imagine. An SZ series brings clarity.

__________________________________________________

Artificial intelligence:

A new series brings science and culture together to fathom the inexplicable

_________________________________________________

The Overdue Debate

By Andrian Kreye

Friday, March 15, 2019

_________________________________________

The Spirit In The Machine

What does artificial intelligence mean?

A series of essays seeks answers.

Part 1

_________________________________________

John Brockman has long called for, and practiced, the unification of the natural sciences and humanities into what he has called “the third culture”. He is the founder of the debate forum Edge.org, where scientists, writers and artists work together to ask the great questions of the present. Since 1981, his "Reality Club" has held gatherings in New York, San Francisco or London in pubs, lofts, museums and living rooms. In 1996, he migrated the debate to Edge.org on the web, which led to his Edge dinners.

Because he has a full-time career as a literary agent for science authors, and was the first to negotiate major deals for books by bestselling academics such as Daniel Kahneman, Richard Dawkins or Lisa Randall, his roundtable has always had a very prominent cast.

One can imagine the 78-year-old today as a mixture of King Arthur and Dorothy Parker. On the one hand, he often recognizes powerful talents earlier than others, which is why he had the Google founders Sergey Brin and Larry Page, Amazon boss Jeff Bezos or the boy wonder of Facebook as guests at his dinner parties when they were still precocious start-up entrepreneurs. On the other hand, he has the humor and charm to keep you on your toes at such events, whether in the back room of a restaurant, at workshop weekends on his farm near New York, or on his web pages.

So it was a rare “must” in the social life of Harvard University when he recently invited a large group to an evening event in Cambridge at the Brattle Theatre, followed by a dinner at the Charles Hotel to continue the debate on artificial intelligence, which he had presented as a "Possible Minds" project last fall. Many of those who attended are celebrated as stars in scientific circles, such as the cognition researcher Steven Pinker, the robot ethicist Kate Darling, the science philosopher Max Tegmark and, as an éminence grise, the writer and anthropologist Mary Catherine Bateson.

In the theater they delivered five-minute lightning talks, presenting a picture of the state of things as wide-ranging as anything currently on offer in the A.I. debate.

Mary Catherine Bateson began the evening with a call for the need to revive the almost forgotten science of systems theory in light of the complex networks of AI. The developmental psychologist Alison Gopnik asked that we remember that while four-year-olds are mentally immature, they’re fitter than any AI. Max Tegmark compared A.I. to a missile that requires precise control by humans.

And Stephen Wolfram was enthusiastic about the almost unlimited possibilities still beyond our understanding.

_________________________________________

John Brockman’s Dinners Are Today’s "Roundtables"

_________________________________________

As a non-academic guest at the dinner that followed, I realized how deeply connected the various disciplines of the humanities and sciences already are in places like Cambridge, MA. It felt like being at a great reunion, hugs and gossip included. John Brockman ruled the room like a social alchemist, taking people from one of the numerous groups and adding them to another to spark new conversations. One could learn about the latest breakthroughs in science, or about projects such as AI-enhanced democratic processes that neutralize lobbyists and empower voters, or get a sense of greater trends like programmable cells (which might have an even greater impact on history than AI, especially if you talk to a biologist).

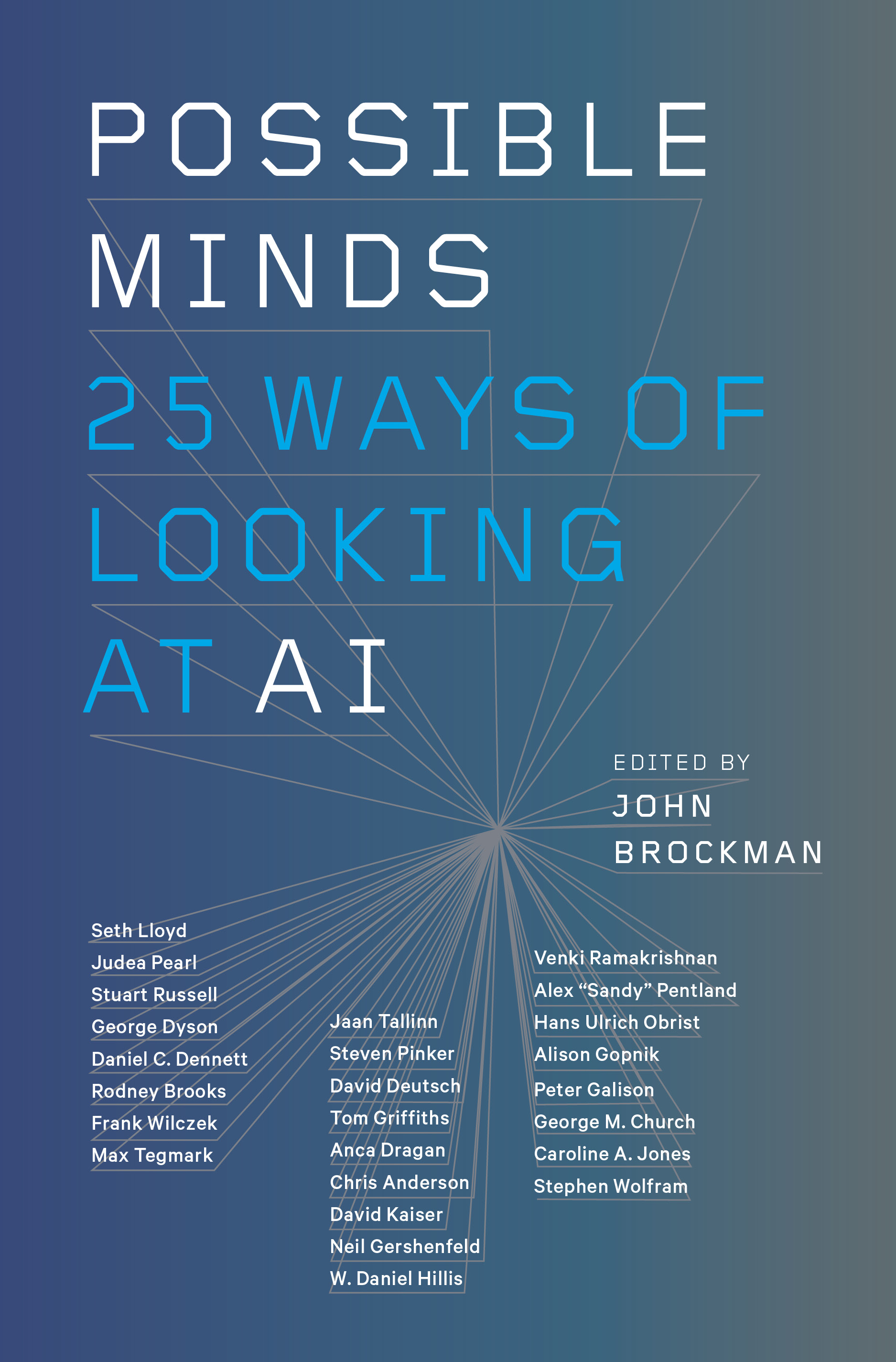

John Brockman's most recent roundtables on artificial intelligence have resulted in an essay collection. Authors and scientists such as the geneticist George Church, Alison Gopnik, the art historian Caroline Jones, Steven Pinker and the Nobel laureate and president of the Royal Society Venki Ramakrishnan have joined the debate about artificial intelligence. The Süddeutsche Zeitung will make the essays available to the public in the coming weeks, publishing them in the Feuilleton.

We will also invite, and publish in our pages, the first reactions of German and European intellectuals, cultural figures, and natural scientists. This will be the beginning of a debate the end of which is not in sight.

Andrian Kreye is the Feuilleton Editor of Süddeutsche Zeitung. © Süddeutsche Zeitung GmbH, Munich. Courtesy of Süddeutsche Zeitung Content.

Journalist Andrian Kreye (Süddeutsche Zeitung) wins Theodor Wolff Prize in "Topic of the Year" Category for Artificial Intelligence Series

June 26, 2019

The Spirit of Unlimited Possibilities

Cage's dinner parties were an ongoing seminar on media, communication, art, music and philosophy, driven by Cage's interest in the ideas of Wiener, Claude Shannon and Marshall McLuhan. Cage was particularly fascinated by McLuhan's idea that with the invention of electronic technologies we had externalized our central nervous system, that is, our mind, and that we had to assume that "there is only one mind that we all share." Such ideas were increasingly circulating among artists and intellectuals at the time. Many of these people were reading Wiener, and cybernetics was in the air. During one of those dinners, Cage reached into his briefcase, pulled out a copy of Wiener's Cybernetics and handed it to me with the words: "This is for you." That was a key moment for everything I've done in the last 53 years.

During the festival, I received an unexpected phone call from Wiener’s colleague Arthur K. Solomon, head of Harvard’s Biophysics Department. Wiener had died the year before, and Solomon’s and Wiener’s other close colleagues at MIT and Harvard had been reading about the Expanded Cinema Festival in the New York Times and were intrigued by the connection to Wiener’s work. Solomon invited me to bring some of the artists up to Cambridge to meet with him and a group that included MIT sensory communications researcher Walter Rosenblith, Harvard applied mathematician Anthony Oettinger, and MIT engineer Harold “Doc” Edgerton, inventor of the strobe light.

New Technologies = New Perceptions

Like many other “art meets science” situations I’ve been involved in since, the two-day event was an informed failure: ships passing in the night. But I took it all on board and the event was consequential in some interesting ways—one of which came from the fact that they took us to see “the” computer. Computers were a rarity back then; at least none of us on the visit had ever seen one. We were ushered into a large space on the MIT campus, in the middle of which there was a “cold room” raised off the floor and enclosed in glass, in which technicians wearing white lab coats, scarves, and gloves were busy collating punch cards coming through an enormous machine. When I approached, the steam from my breath fogged up the window into the cold room. Wiping it off, I saw “the” computer. I fell in love.

I began to develop a theme, a mantra of sorts, that has informed my endeavors since: “new technologies = new perceptions.” Inspired by communications theorist Marshall McLuhan, architect-designer Buckminster Fuller, futurist John McHale, and cultural anthropologists Edward T. “Ned” Hall and Edmund Carpenter, I started reading avidly in the fields of information theory, cybernetics, and systems theory. McLuhan himself suggested I read Warren Weaver and Claude Shannon’s 1949 paper “Recent Contributions to the Mathematical Theory of Communication,” which begins: “The word communication will be used here in a very broad sense to include all of the procedures by which one mind may affect another. This, of course, involves not only written and oral speech, but also music, the pictorial arts, the theater, the ballet, and in fact all human behavior.”

Reality is a man-made process. The images we make of ourselves and our world are in part models rooted in the knowledge of the technologies we create. Although many of us weren't ready yet, after only a few years we looked at our brain as a computer. And when we connected computers to the Internet, we realized that our brain is not a computer, but a network of computers.

Basic text of the digital debate: Norbert Wiener's Cybernetics of 1948. Photo: SZ

Two years after Cybernetics, in 1950, Norbert Wiener published The Human Use of Human Beings—a deeper story, in which he expressed his concerns about the runaway commercial exploitation and other unforeseen consequences of the new technologies of control. I didn’t read The Human Use of Human Beings until the spring of 2016, when I picked up my copy, a first edition, which was sitting in my library next to Cybernetics. What shocked me was the realization of just how prescient Wiener was in 1950 about what’s going on today. Although the first edition was a major bestseller—and, indeed, jump-started an important conversation—under pressure from his peers Wiener brought out a revised and milder edition in 1954, from which the original concluding chapter, “Voices of Rigidity,” is conspicuously absent.

Science historian George Dyson points out that in this long-forgotten first edition, Wiener predicted the possibility of a “threatening new Fascism dependent on the machine à gouverner”:

No elite escaped his criticism, from the Marxists and the Jesuits (“all of Catholicism is indeed essentially a totalitarian religion”) to the FBI (“our great merchant princes have looked upon the propaganda technique of the Russians, and have found that it is good”) and the financiers lending their support “to make American capitalism and the fifth freedom of the businessman supreme throughout the world.” Scientists. . . received the same scrutiny given the Church: “Indeed, the heads of great laboratories are very much like Bishops, with their association with the powerful in all walks of life, and the dangers they incur of the carnal sins of pride and of lust for power.”

This jeremiad did not go well for Wiener. As Dyson puts it:

These alarms were discounted at the time, not because Wiener was wrong about digital computing but because larger threats were looming as he completed his manuscript in the fall of 1949. Wiener had nothing against digital computing but was strongly opposed to nuclear weapons and refused to join those who were building digital computers to move forward on the thousand-times-more-powerful hydrogen bomb.

Since the original of The Human Use of Human Beings is now out of print, lost to us is Wiener’s cri de coeur, more relevant today than when he wrote it sixty-eight years ago: “We must cease to kiss the whip that lashes us.”

It Is Time to Challenge the Prevailing Digital AI Narrative

Among the reasons we don’t hear much about cybernetics today, two are central: First, although The Human Use of Human Beings was considered an important book in its time, it ran counter to the aspirations of many of Wiener’s colleagues, including John von Neumann and Claude Shannon, who were interested in the commercialization of the new technologies. Second, computer pioneer John McCarthy disliked Wiener and refused to use Wiener’s term “Cybernetics.” McCarthy, in turn, coined the term “artificial intelligence” and became a founding father of that field.

Cybernetics, rather than disappearing, was becoming metabolized into everything, so we no longer saw it as a separate, distinct new discipline. And there it remains, hiding in plain sight.

Now AI is everywhere. We have the Internet. We have our smartphones. The founders of the dominant companies, the companies that hold “the whip that lashes us,” have net worths of $65 billion, $90 billion, $150 billion. High-profile individuals such as Elon Musk, Nick Bostrom, Martin Rees, Eliezer Yudkowsky, and the late Stephen Hawking have issued dire warnings about AI, resulting in the ascendancy of well-funded institutes tasked with promoting “Nice AI.” But will we, as a species, be able to control a fully realized, unsupervised, self-improving AI? Wiener’s warnings and admonitions in The Human Use of Human Beings are now very real, and they need to be looked at anew by researchers at the forefront of the AI revolution.

Here is Dyson again:

Wiener became increasingly disenchanted with the “gadget worshipers” whose corporate selfishness brought “motives to automatization that go beyond a legitimate curiosity and are sinful in themselves.” He knew the danger was not machines becoming more like humans but humans being treated like machines. “The world of the future will be an ever more demanding struggle against the limitations of our intelligence,” he warned in God & Golem, Inc., published in 1964, the year of his death, “not a comfortable hammock in which we can lie down to be waited upon by our robot slaves.”

Thus, it’s time to present the ideas of a community of sophisticated thinkers who are bringing their experience and erudition to bear in challenging the prevailing digital AI narrative as they communicate their thoughts to one another. The aim is to present a mosaic of views that will help make sense out of this rapidly emerging field.

As in the fable of the blind men and the elephant, AI is far too big a subject to be viewed from a single angle. The "Possible Minds Project," from which the essays in this series emerged, and are also published as CT, where some of the authors of these essays were also present.

Since then, I have hosted a series of dinners and discussions in New York, London, Cambridge, and the Serpentine Marathon on Artificial Intelligence, and major public events at London City Hall, The Brattle Theatre in Cambridge, The LongNow at SF Jazz, and Pioneer Works, in Brooklyn. Among the guests were natural scientists and humanists, communication theorists, science historians, philosophers and artists, all of whom have thought very seriously about artificial intelligence throughout their professional lives. It’s time to examine the evolving AI narrative by identifying the leading members of that mainstream community along with the dissidents and presenting their counter-narratives in their own voices.

The essays that follow thus constitute a much-needed update from the field.

[Adapted from the Introduction to Possible Minds: 25 Ways of Looking at AI, edited by John Brockman (Penguin Press, 2019)]

Collage: Stefan Dimitrov

A Question of Faith

By George Dyson

Monday, September 23, 2019

Collage: Stefan Dimitrov

A Dream of Objectivity

By Peter Galison

Saturday, August 10, 2019

Collage: Stefan Dimitrov

Once Again With Feeling

By Alex "Sandy" Pentland

Wednesday, July 24, 2019

Collage: Stefan Dimitrov

Will Computers Be Our Rulers?

By Venki Ramakrishnan

Tuesday, July 16, 2019

Collage: Stefan Dimitrov

The Circle is Closing

By Neil Gershenfeld

Tuesday, June 4, 2019

Collage: Stefan Dimitrov

Borders of Obscure Learning

By Judea Pearl

Wednesday, May 15, 2019

Collage: Stefan Dimitrov

Unpredictable Human

By Anca Dragan

Tuesday, April 23, 2019

Collage: Stefan Dimitrov

Meat and Blood Machines

By W. Daniel Hillis

Wednesday, April 10, 2019

Collage: Stefan Dimitrov

Beings and Tools

By Daniel C. Dennett

Monday, April 1, 2019

Collage: Stefan Dimitrov

The Wisdom of Children

By Alison Gopnik

Tuesday, March 26, 2019

[Adapted from Chapter 21, "AIs Versus Four-Year-Olds" by Alison Gopnik, from Possible Minds: 25 Ways of Looking at AI, edited by John Brockman (Penguin Press, 2019)]

Collage: Stefan Dimitrov

What Is Intelligence Anyway?

By Steven Pinker

Wednesday, March 20, 2019